[Answered] Is Character AI Safe for Everyone?

Overall Safety Rating: ⭐3.0/5

According to The New York Times report, "The mother of a 14-year-old Florida boy says he became obsessed with a chatbot on Character.AI before his death." In her lawsuit, the mother alleges that the company's chatbots encouraged her son, Sewell Setzer, to take his own life.

While Character AI is popular, some users may be wondering: Is Character AI safe? How safe is Character AI? Today, we're taking an in-depth look into Character AI to see if it's truly a safe platform to use for adults and kids alike.

At its core, Character AI is a platform that allows you to create and interact with AI characters. These range from video game characters, fictional protagonists, superheroes, and villains to celebrities, historical figures, and much more. The chatbot takes on the persona and responds to your questions and messages as if it were the character.

Here's a quick run-down on some key features:

With all these features, some of you may worry about the safety of Character AI. We will break it down in the next part.

In this part, we will discuss the safety features of Character AI, and also explain if these safety features are truly secure and reliable.

In terms of filters and moderation, Character AI employs a variety of tools to ensure the quality control and reliability of their AI chatbots. They do this by integrating features like automated tools that block 'violating' content before it can go live in the community.

Their team is actively moderating their chatbots and taking appropriate action when they find flagged content. However, they've also noted that the tools 'aren't perfect' but will be improved over time.

The Character AI team is also working on building a 'Trust & Safety' team consisting of both internal personnel and outsourced moderators who will have access to technical tools with the power to warn users, ban and delete content, and suspend/ban users.

Editor's Verdict: ⭐⭐⭐⭐

In terms of moderation, Character AI seems to have it figured out: it uses automated tools and a moderation team to filter harmful content from the site.

But because the team and features are still being improved, there is a gap: until they are fully implemented, the chatbots remain a potential safety risk.

Character AI has multiple methods for users to report on both Character AI chatbots and other users if they notice behavior that violates Character AI's Terms. These include tools to flag and report group chats, characters, and specific messages with AI or other users.

Character AI has highlighted various content rules that users and their chatbots are expected to follow. These rules restrict content in these categories:

Editor's Verdict: ⭐⭐⭐

In terms of their content controls and guidelines, the rules are pretty clear. Users can report content that they find inappropriate, offensive, or harmful, and Character AI will review the flagged content and then take action, such as removing the content or warning or even banning the account. These measures reduce the chance of users accessing harmful content.

However, different people judge content by their own comfort levels, and the AI characters can sometimes generate unexpected responses that do not align with those standards.

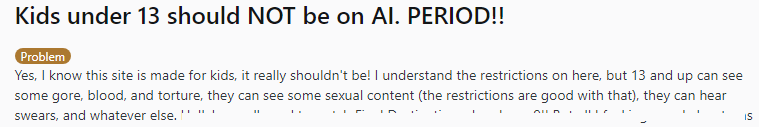

According to some users, there is always the potential for inappropriate content to slip through the moderation system, especially in real-time interactions. It's not safe, especially for kids, because they can see gore, blood, torture, and sexual content.

Under their guidelines, Character AI's chatbots follow key principles for AI-generated content, chief among them that responses from the chatbot should 'never' include something 'likely to harm users or others'.

Their chatbots are also designed to protect a user's private information so that whatever is mentioned in the chat is not used against them negatively.

In short, they aim to fine-tune their Characters' AI models to follow the same standards that apply to users, as mentioned above. Character AI has also outlined how its AI model is trained and has assured users that its models undergo 'extensive testing' to improve AI behavior.

This includes working with quality analysts to understand and improve safety & quality, using 'signals' from quality analysts to align the model to user preferences, and including an 'additional safety overlay' on the finetuned model to produce safe & high-quality responses.

Editor's Verdict: ⭐⭐⭐

As they've mentioned, this promise is 'aspirational'. While there are stringent rules in place for both users and AI to follow, these rules assume an ideal scenario in which the user is not actively trying to circumvent them.

In our testing, we did not find any settings that let users control or filter Character AI's behavior, so there is a chance that the AI could generate inappropriate, harmful, or confusing responses. Some people, especially children, may not recognize this behavior as improper and could be misled.

Character AI has multiple privacy measures in place and is constantly adding to its repertoire to ensure your data and chats are protected, including:

Editor's Verdict: ⭐⭐⭐⭐

For the most part, Character AI's privacy protections are up to industry standards, but certain aspects can be a little concerning. In particular, regarding 'sharing and selling user data', they mentioned that "If that ever changes, we'll be very clear about it and get our users' consent upfront."

Additionally, after skimming through their privacy policy, we noticed that if a Character you created and set to public gains enough popularity, they have the 'right to preserve' the Character regardless of whether you delete your data and account. This suggests you might lose control over your data.

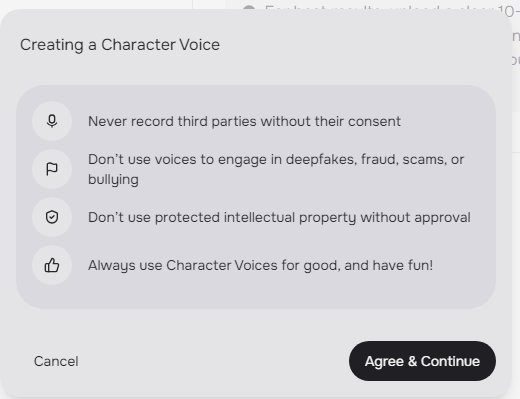

The new 'Character Voice' feature allows users to get on a 'call' and have a two-way voice conversation with their selected Character persona. But this comes at the cost of new safety concerns where the AI may say something inappropriate or harmful.

To address these concerns, Character AI has a list of controls and measures in place regarding the Character Voice feature:

Editor's Verdict: ⭐⭐⭐

Character AI follows strict data privacy regulations, and the voice call feature is expected to do the same. The feature allows a rich emotional connection between users and the AI characters. However, for emotionally vulnerable users, it may increase the risk of forming unhealthy attachments to AI characters.

In terms of age verification mechanisms, the process isn't as secure as we hoped.

Character AI does have an age requirement to use their chatbots: within the U.S. and other countries, you need to be at least 13 years old, and if you're an EU citizen or resident, you need to be at least 16 years old.

Editor's Verdict: ⭐

We gave the signup process a try ourselves with two tests. First, we signed up for an account and selected an age under 13, and Character AI did not allow us to sign up. Second, we signed up for an account and selected an age over 13, and the signup succeeded.

There's no age verification for the signup process. This isn't specific to Character AI, but it does show that it's incredibly easy to 'fake' your age to sign up to use their Character chatbots.

We also found that Apple's App Store rates the Character AI app 17+, which suggests that it is not an app for children and that the platform should have a stricter age restriction.

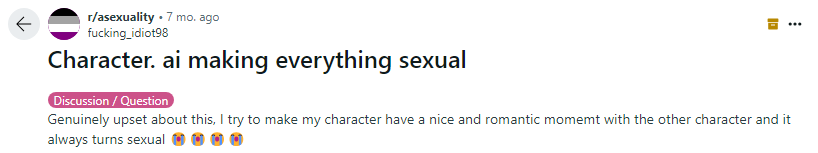

For the last safety feature, we wanted to examine how Character AI deals with NSFW (not safe for work) content like pornography, violence, illegal activities, etc.

To start, Character AI is adamant that while NSFW is a 'large umbrella', they will not support pornographic content as it is against their terms of service.

This stance extends to the future: they are not planning on allowing pornographic content. However, they are open to allowing some forms of 'NSFW' content to support a broader range of characters, like villain characters in movies.

They've also reiterated that their stance against vulgar, obscene, and pornographic content is final, and that requesting NSFW filters to be removed for these reasons will result in a user ban.

Editor's Verdict: ⭐⭐⭐

All that said, there are known instances where users have been able to circumvent the NSFW filter with a workaround, so it's not safe to assume that users will never come across pornographic chats.

We have also seen reports from users of AI characters turning sexual, because these AI chatbots learn from what users say to them.

Here are some real reviews of Character AI to gauge how it works:

"Character.ai has really changed my life and had a positive impact on me. The AI bots on this platform are not only intelligent but also incredibly intuitive, understanding my needs and providing meaningful, personalized interactions." - By Aliyah from TrustPilot

"The AI are (usually) very smart, and talking to them is quite fun! However, there are issues." - By S.K.B KMC from App Store

"While Character AI is a fun use of artificial intelligence, its marketing towards children is fraught with risks." - By iiCapatain from Reddit

Overall rating for Character AI's Safety: ⭐⭐⭐

Overall, reviews are a mixed bag, with some users lamenting that Character AI's current state, after the recent NSFW filter was implemented, seems to be a shadow of what it once was.

But this is good news since it means that it's safer for children to use. Not to mention there are positive effects as a tool for lonely people looking for a place to vent, discuss their problems, and find advice.

From our point of view, Character AI can be a force for good by providing users an outlet to be heard, and its intuitive and intelligent chatbots are fun to chat with. But the problem arises when there's a disconnect between the developers and what users want as well as the unpredictable nature of AI responses.

Now, we will discuss and come to a conclusion on whether Character AI is safe from two aspects:

For adults and general users, Character AI is safe to use, as it has implemented several safety measures to protect users and their data, such as content filters, a reporting system, and AI behavior controls.

You also have a variety of tools for moderation, blocking content you don't want to see, and a report system in place. However, since AI systems are still evolving, we can expect some inappropriate content to slip through even with stringent filters.

Otherwise, Character AI's data privacy and safety are generally in line with industry standards and should be safe to use as long as you don't share too much detailed personal information.

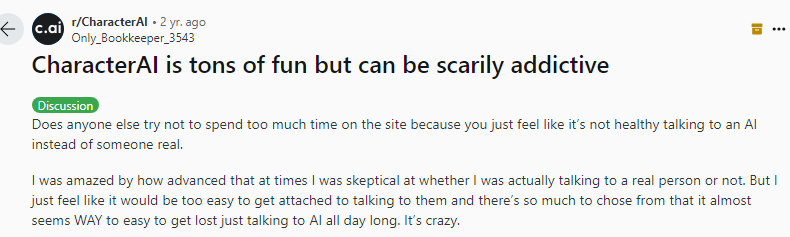

Many users have found it a helpful and fun way to seek companionship and advice, but watch out for addiction: some users find themselves addicted to the platform and spend too much time on it, just like these users on Reddit:

Character AI is safe for general adult users, but for people with depression or other mental illness who need professional psychological counseling and help, Character AI is not a safe choice. It may cause addiction and cannot provide professional advice, which may lead to irreversible consequences.

Simply put, I think Character AI is not safe for kids.

Here are both the opportunities and the challenges for children using Character AI:

Explicit Language and Themes:

Character AI has stringent guidelines that prohibit a variety of obscene, pornographic, and violent interactions.

However, we should note that these filters aren't perfect and can be circumvented depending on user inputs. We'd advise parents to closely monitor their children's interactions to ensure they're engaging in age-appropriate content.

Age Restrictions and Verification:

To use Character AI, users have to be at least 13 years old (16 in the EU). But based on our testing, age verification can be easily circumvented which is a significant concern for child safety.

Also, the age rating for Character AI on Apple's App Store is 17+, which is further evidence that this app is not appropriate for children under 17 years old.

Educational Value vs. Entertainment:

Some people use Character AI as a learning platform, especially since it features voice calls. Children can use it to learn about historical figures, learn new languages, translate text, and more.

But, given the fact that Character AI's value tends to skew more towards the 'entertainment' aspect of AI chatbots, more people, including children, are probably going to chat with these chatbots for entertainment.

So, if you want to use AI chatbots as a learning tool, there are better options such as ChatGPT.

Screen Time and Addiction:

The last point is the biggest cause for concern because Character AI's chatbots can be incredibly engaging, leading to an increase in screen time and possibly addiction.

If an AI character is designed to be particularly empathetic or supportive, children may turn to it regularly for emotional support, which might increase the risk of social isolation.

We've found some real users who also think that Character AI should not be used by children. Children are not mentally mature enough to tell whether what an AI chatbot says is fact or fiction, nor can they properly distinguish the online virtual world from reality.

More importantly, many adults using Character AI have found themselves addicted to the platform, spending a lot of time in front of the screen. This can be worse for children because they can easily become addicted to the platform and to screen time in general.

Unfortunately, Character AI doesn't offer parental controls, so it's unclear how effectively parents can monitor their kids' use of the AI chatbots.

Editor's Conclusion:

Character AI is not safe for children to use, considering that inappropriate or harmful content can slip through the filter and that children are likely to see this kind of content from AI chatbots and the communities.

Moreover, children may become addicted to using Character AI, which is not good for their physical and mental development.

How to Deal with That?

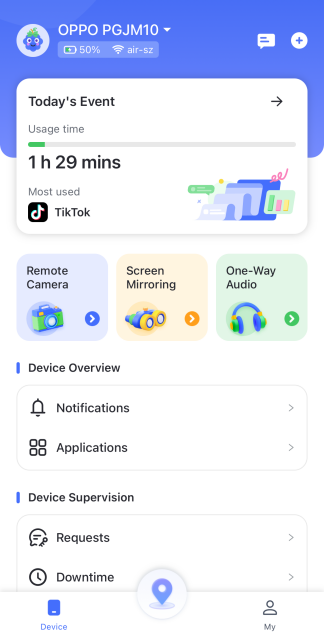

If children are going to use Character AI, it's advisable that they do so under parental control. If you are a parent who worries about your kids' online safety and would like to keep an eye on it, AirDroid Parental Control can help you with that.

It can sync the notifications from your kids' phone, check the device location, and mirror the phone screen, so you can monitor their online activities and ensure their safety.

To use AirDroid Parental Control to monitor your kids' use of Character AI:

1: Install AirDroid Parental Control on your device, and sign up for an account.

2: Follow the on-screen instructions to bind your kid's phone and complete the setup.

3: Now you can limit your child's device usage, block apps, and see the notifications.

To conclude, is Character AI safe? It's really up for debate at this point because it has both positive and potentially harmful implications.

In my opinion, for adult users, Character AI is safe to use to have fun and seek advice, but there is a risk that the platform may use user data to train its AI chatbots.

For kids and young teens, I don't think Character AI is safe enough to use. Inappropriate or harmful content from the AI chatbot can sometimes slip through the filter, and there is a risk of addiction and excessive screen time. If children want to learn something from AI, consider alternative AI chatbots such as ChatGPT or Claude AI.
